One of the most onerous tasks in 3D printing is the painstaking process of creating 3D models from scratch. Models can take hours to create, even for those with immense expertise in the field. Luckily, a group of researchers at MIT has developed a new method of synthesising 3D models from pictures of objects.

3D Generative Adversarial Networks

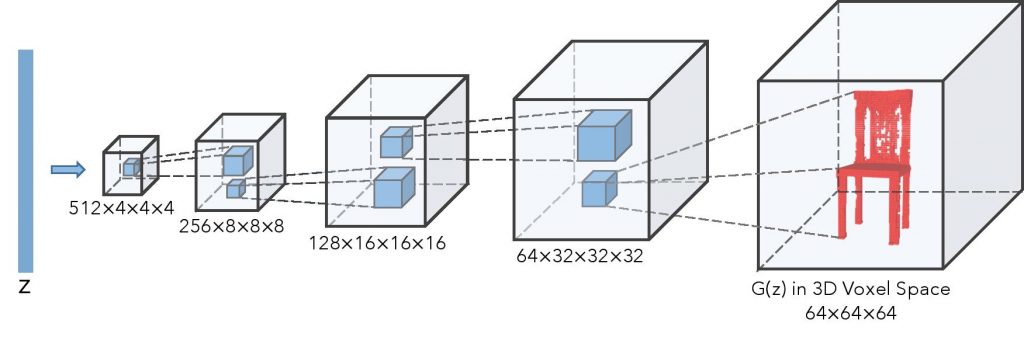

3D Generative Adversarial Networks (3D GAN for short) promise the ability to generate 3D models from pictures of whatever it is you want to model. GANs are a class of generative models in which two neural networks compete: a generator produces candidate outputs, and a discriminator tries to tell them apart from real examples. Even though the models produced are low resolution, they can save precious hours by generating the basic shape of the object in question. The system takes an image of a household item, a chair for instance, and converts it into a grid of voxels (cubic, three-dimensional pixels) that can serve as a starting point for your design. From there, it is much easier to chisel the rough shape down into the desired outcome.
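To make the "cubic pixels" idea concrete, here is a minimal sketch of a voxel grid, the kind of blocky 3D representation described above. It is not the researchers' code: the function name `voxelize` and the default resolution of 32 are assumptions chosen for illustration, and it simply marks which cells of a cube contain at least one point of a shape.

```python
import numpy as np

def voxelize(points, resolution=32):
    """Map an (N, 3) array of points to a boolean occupancy grid.

    Hypothetical helper for illustration; `resolution` is an assumed value.
    """
    points = np.asarray(points, dtype=float)
    lo = points.min(axis=0)
    # Scale uniformly by the longest side so the shape keeps its proportions.
    extent = (points.max(axis=0) - lo).max()
    if extent == 0:
        extent = 1.0  # all points coincide; avoid dividing by zero
    idx = np.floor((points - lo) / extent * (resolution - 1)).astype(int)
    grid = np.zeros((resolution,) * 3, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True  # mark occupied cells
    return grid

# Two opposite corners of a unit cube land in opposite corners of the grid.
grid = voxelize([[0, 0, 0], [1, 1, 1]], resolution=4)
```

A low resolution keeps the grid small, which is one reason a voxel model looks coarse and works better as a starting point than as a finished print.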

How it works

It converts the picture into a usable 3D format using a network that has been trained on large datasets of 3D shapes. Having learned from many examples of object categories such as cars and chairs, the network can recreate a similar object once it recognises what is in the picture. During training, the datasets supply the reference shapes, and the adversarial discriminator compares generated shapes against them, which pushes the generator towards more realistic output.
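The adversarial part can be sketched with the standard GAN objective, in which a generator network proposes shapes and the discriminator scores how real each one looks. The snippet below is an illustration of that general idea rather than the MIT implementation: the function names are hypothetical, and the inputs are plain arrays of discriminator scores between 0 and 1 instead of real network outputs.

```python
import numpy as np

def bce(pred, target, eps=1e-7):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, eps, 1 - eps)  # keep log() finite
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

def discriminator_loss(d_real, d_fake):
    """Low when real shapes score near 1 and generated shapes score near 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring generated shapes near 1."""
    return bce(d_fake, np.ones_like(d_fake))

# Assumed scores: the discriminator is confident about both batches.
d_real = np.array([0.9])
d_fake = np.array([0.1])
d_loss = discriminator_loss(d_real, d_fake)
g_loss = generator_loss(d_fake)
```

With these scores the discriminator is winning, so its loss is small while the generator's loss is large. Training alternates between the two until the generated shapes become hard to tell apart from the dataset.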

Mixing objects

What’s more, the software boasts the ability to mix and match objects of various kinds and derive intermediate models between them. Take two tables and you can arrive at a rough mixture of the two. It can also create a synthesis between objects that are unlike each other. A video showed a model of a car and a model of a boat being merged together.
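One common way generative models blend two objects is to interpolate between the vectors that encode them and decode each intermediate vector into a shape. The sketch below assumes that approach and shows only the interpolation step. The name `interpolate_latents` is hypothetical, and the trained generator that would turn each vector into a voxel model is not included.

```python
import numpy as np

def interpolate_latents(z_a, z_b, steps=5):
    """Return `steps` vectors moving linearly from z_a to z_b (inclusive)."""
    z_a = np.asarray(z_a, dtype=float)
    z_b = np.asarray(z_b, dtype=float)
    weights = np.linspace(0.0, 1.0, steps)
    # Each row is a weighted blend: all of z_a at first, all of z_b at last.
    return np.stack([(1 - w) * z_a + w * z_b for w in weights])

# Toy 2-dimensional codes standing in for, say, a car and a boat.
blend = interpolate_latents([0.0, 0.0], [2.0, 4.0], steps=3)
```

The middle row of `blend` sits halfway between the two codes, which is where a generator would be expected to produce the most even mixture of the two objects.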

3D GAN models might also make it far easier to obtain printable models from pictures. Imagine this: you’re walking along and you see a car that you might want to reproduce as a 3D print at home. All you have to do is take a picture of it and pass it through the network to get a workable starting point.

The source code is available for use online.

You can read the MIT study here or watch a breakdown of the software’s abilities by YouTube channel ‘Two Minute Papers’ below.